INTRODUCTION

Information systems were developed in early 1960s to process orders, billings, inventory controls, payrolls, and accounts payables. Soon information systems research began. Harry Stern started the "Information Systems in Management Science" column in Management Science journal to provide a forum for discussion beyond just research papers (Banker & Kauffman, 2004). Ackoff (1967) led the earliest research on management information systems for decision-making purposes and published it in Management Science. Gorry and Scott Morton (1971) first used the term 'decision support systems' (DSS) in a paper and constructed a framework for improving management information systems. The topics on information systems and DSS research diversifies. One of the major topics has been on how to get systems design right.

As an active component of DSS, which is part of today's business intelligence systems, data warehousing became one of the most important developments in the information systems field during the mid-to-late 1990s. Since business environment has become more global, competitive, complex, and volatile, customer relationship management (CRM) and e-commerce initiatives are creating requirements for large, integrated data repositories and advanced analytical capabilities. By using a data warehouse, companies can make decisions about customer-specific strategies such as customer profiling, customer segmentation, and cross-selling analysis (Cunningham et al., 2006). Thus how to design and develop a data warehouse have become important issues for information systems designers and developers.

This paper presents some of the currently discussed development and design methodologies in data warehousing, such as the multidimensional model vs. relational ER model, CIF vs. multidimensional methodologies, data-driven vs. metric-driven approaches, top-down vs. bottom-up design approaches, data partitioning and parallel processing.

BACKGROUND

Data warehouse design is a lengthy, time-consuming, and costly process. Any wrongly calculated step can lead to a failure. Therefore, researchers have placed important efforts to the study of design and development related issues and methodologies.

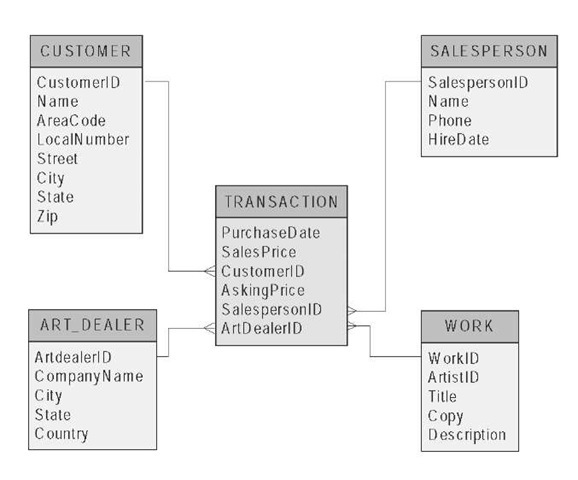

Data modeling for a data warehouse is different from operational database data modeling. An operational system, e.g., online transaction processing (OLTP), is a system that is used to run a business in real time, based on current data. An OLTP system usually adopts Entity-relationship (ER) modeling and application-oriented database design (Han & Kamber, 2006). An information system, like a data warehouse, is designed to support decision making based on historical point-in-time and prediction data for complex queries or data mining applications (Hoffer, et al., 2007). A data warehouse schema is viewed as a dimensional model (Ahmad et al., 2004, Han & Kamber, 2006; Levene & Loizou, 2003). It typically adopts either a star or snowflake schema and a subject-oriented database design (Han & Kamber, 2006). The schema design is the most critical to the design of a data warehouse.

Many approaches and methodologies have been proposed in the design and development of data warehouses. Two major data warehouse design methodologies have been paid more attention. Inmon et al. (2000) proposed the Corporate Information Factory (CIF) architecture. This architecture, in the design of the atomic-level data marts, uses denormalized entity-relationship diagram (ERD) schema. Kimball (1996, 1997) proposed multidimensional (MD) architecture.

This architecture uses star schema at atomic-level data marts. Which architecture should an enterprise follow? Is one better than the other?

Currently, the most popular data model for data warehouse design is the dimensional model (Han & Kamber, 2006; Bellatreche, 2006). Some researchers call this model the data-driven design model. Artz (2006), nevertheless, advocates the metric-driven model, which, as another view of data warehouse design, begins by identifying key business processes that need to be measured and tracked over time in order for the organization to function more efficiently. There has always been the issue of top-down vs. bottom-up approaches in the design of information systems. The same is with a data warehouse design. These have been puzzling questions for business intelligent architects and data warehouse designers and developers. The next section will extend the discussion on issues related to data warehouse design and development methodologies.

DESIGN AND DEVELOPMENT METHODOLOGIES

Data Warehouse Data Modeling

Database design is typically divided into a four-stage process (Raisinghani, 2000). After requirements are collected, conceptual design, logical design, and physical design follow. Of the four stages, logical design is the key focal point of the database design process and most critical to the design of a database. In terms of an OLTP system design, it usually adopts an ER data model and an application-oriented database design (Han & Kamber, 2006). The majority of modern enterprise information systems are built using the ER model (Raisinghani, 2000). The ER data model is commonly used in relational database design, where a database schema consists of a set of entities and the relationship between them. The ER model is used to demonstrate detailed relationships between the data elements. It focuses on removing redundancy of data elements in the database. The schema is a database design containing the logic and showing relationships between the data organized in different relations (Ahmad et al., 2004). Conversely, a data warehouse requires a concise, subject-oriented schema that facilitates online data analysis. A data warehouse schema is viewed as a dimensional model which is composed of a central fact table and a set of surrounding dimension tables, each corresponding to one of the components or dimensions ofthe fact table (Levene & Loizou, 2003). Dimensional models are oriented toward a specific business process or subject. This approach keeps the data elements associated with the business process only one join away. The most popular data model for a data warehouse is multidimensional model. Such a model can exist in the form of a star schema, a snowflake schema, or a starflake schema.

The star schema (see Figure 1) is the simplest database structure containing a fact table in the center, no redundancy, which is surrounded by a set of smaller dimension tables (Ahmad et al., 2004; Han & Kamber, 2006). The fact table is connected with the dimension tables using many-to-one relationships to ensure their hierarchy. The star schema can provide fast response time allowing database optimizers to work with simple database structures in order to yield better execution plans.

The snowflake schema (see Figure 2) is a variation of the star schema model, in which all dimensional information is stored in the third normal form, thereby further splitting the data into additional tables, while keeping fact table structure the same. To take care of hierarchy, the dimension tables are connected with sub-dimension tables using many-to-one relationships. The resulting schema graph forms a shape similar to a snowflake (Ahmad et al., 2004; Han & Kamber, 2006). The snowflake schema can reduce redundancy and save storage space. However, it can also reduce the effectiveness of browsing and the system performance may be adversely impacted. Hence, the snowflake schema is not as popular as star schema in data warehouse design (Han & Kamber, 2006). In general, the star schema requires greater storage, but it is faster to process than the snowflake schema (Kroenke, 2004).

The starflake schema (Ahmad et al., 2004), also called galaxy schema or fact constellation schema (Han & Kamber, 2006), is a combination of the de-normalized star schema and the normalized snowflake schema (see Figure 3). The starflake schema is used in situations where it is difficult to restructure all entities into a set of distinct dimensions. It allows a degree of crossover between dimensions to answer distinct queries (Ahmad et al., 2004). Figure 3 illustrates the starflake schema.

What needs to be differentiated is that the three schemas are normally adopted according to the differences of design requirements. A data warehouse collects information about subjects that span the entire organization, such as customers, items, sales, etc. Its scope is enterprise-wide (Han & Kamber, 2006). Starflake schema can model multiple and interrelated subjects. Therefore, it is usually used to model an enterprise-wide data warehouse. A data mart, on the other hand, is similar to a data warehouse but limits its focus to a department subject of the data warehouse. Its scope is department-wide. The star schema and snowflake schema are geared towards modeling single subjects. Consequently, the star schema or snowflake schema is commonly used for a data mart modeling, although the star schema is more popular and efficient (Han & Kamber, 2006).

Figure 1. Example of a star schema

Figure 2. Example of a snowflake schema

Figure 3. Example of a starflake schema

CIF vs. Multidimensional

Two major design methodologies have been paid more attention in the design and development of data warehouses. Kimball (1996, 1997) proposed multidimensional (MD) architecture. Inmon, Galemmco, and Geiger (2000) proposed the Corporate Information Factory (CIF) architecture. Imhoff et al. (2004) made a comparison between the two by using important criteria, such as scope, perspective, data flow, etc. One of the most significant differences between the CIF and MD architectures is the definition of data mart. For MD architecture, the design of the atomic-level data marts is significantly different from the design of the CIF data warehouse, while its aggregated data mart schema is approximately the same as the data mart in the CIF architecture. MD architecture uses star schemas, whereas CIF architecture uses denormalized ERD schema. This data modeling difference constitutes the main design difference in the two architectures (Imhoff et al., 2004). A data warehouse may need both types of data marts in the data warehouse bus architecture depending on the business requirements. Unlike the CIF architecture, there is no physical repository equivalent to the data warehouse in the MD architecture.

The design of the two data marts is predominately multidimensional for both architecture, but the CIF architecture is not limited to just this design and can support a much broader set of data mart design techniques. In terms of scope, both architectures deal with enterprise scope and business unit scope, with CIF architecture putting a higher priority on enterprise scope and MD architecture placing a higher priority on business unit scope. Imhoff et al. (2004) encourage the application of a combination of the data modeling techniques in the two architectural approaches, namely, the ERD or normalization techniques for the data warehouse and the star schema data model for multidimensional data marts. A CIF architecture with only a data warehouse and no multidimensional marts is almost useless and a multidimensional data-mart-only environment risks the lack of an enterprise integration and support for other forms of business intelligence analyses.

Data-Driven vs. Metric-Driven

Currently, the most popular data model for data warehouse design is the dimensional model (Han & Kamber, 2006; Bellatreche, 2006). In this model, data from OLTP systems are collected to populated dimensional model. Researchers term a data warehouse design based on this model as a data-driven design model since the information acquisition processes in the data warehouse are driven by the data made available in the underlying operational information systems. Another view of data warehouse design is called the metric-driven view (Artz, 2006), which begins by identifying key business processes that need to be measured and tracked over time in order for the organization to function more efficiently. Advantages of data-driven model include that it is more concrete, evolutionary, and uses derived summary data. Yet the information generated from the data warehouse may be meaningless to the user owing to the fact that the nature of the derived summary data from OLTP systems may not be clear. The metric-driven design approach, on the other hand, begins first by defining key business processes that need to be measured and tracked over time. After these key business processed are identified, then they are modeled in a dimensional data model. Further analysis follows to determine how the dimensional model will be populated (Artz, 2006).

According to Artz (2006), data-driven model to a data warehouse design has little future since information derived from a data-driven model is information about the data set. Metric-driven model, conversely, is possibly to have some key impacts and implications because information derived from a metric-driven model is information about the organization. Data-driven approach is dominating data warehouse design in organizations at present. Metric-driven, on the other hand, is at its research stage, needing practical application testimony of its speculated potentially dramatic implications.

Top-Down vs. Bottom-Up

There are two approaches in general to building a data warehouse prior to the data warehouse construction commencement, including data marts: the top-down approach and bottom-up approach (Han & Kamber, 2006; Imhoff et al., 2004; Marakas, 2003). Top-down approach starts with a big picture of the overall, enterprise-wide design. The data warehouse to be built is large and integrated, with a focus on integrating the enterprise data for usage in any data mart from the very first project (Imhoff et al., 2004). It implies a strategic rather than an operational perspective of the data. It serves as the proper alignment of an organization's information systems with its business goals and objectives (Marakas, 2003). However, this approach is risky (Ponniah, 2001). In contrast, a bottom-up approach is to design the warehouse with business-unit needs for operational systems. It starts with experiments and prototypes (Han & Kamber, 2006). With bottom-up, departmental data marts are built first one by one. It offers faster and easier implementation, favorable return on investment, and less risk of failure, but with a drawback of data fragmentation and redundancy. The focus of bottom-up approach is to meet unit-specific needs with minimum regards to the overall enterprise-wide data requirements (Imhoff et al, 2004).

An alternative to the above-discussed two approaches is to use a combined approached (Han & Kamber, 2006), with which "an organization can exploit the planned and strategic nature of the top-down approach while retaining the rapid implementation and opportunistic application of the bottom-up approach" (p. 129), when such an approach is necessitated in the undergoing organizational and business scenarios.

Data Partitioning and Parallel Processing

Data partitioning is the process of decomposing large tables (fact tables, materialized views, indexes) into multiple small tables by applying the selection operators (Bellatreche, 2006). A good partitioning scheme is an essential part of designing a database that will benefit from parallelism (Singh, 1998). With a well performed partitioning, significant improvements in availability, administration, and table scan performance can be achieved.

Parallel processing is based on a parallel database, in which multiprocessors are in place. Parallel databases link multiple smaller machines to achieve the same throughput as a single, larger machine, often with greater scalability and reliability than single processor databases (Singh, 1998). In a context of relational online analytical processing (ROLAP), by partitioning data of ROLAP schema (star schema or snowflake schema) among a set of processors, OLAP queries can be executed in a parallel, potentially achieving a linear speedup and thus significantly improving query response time (Datta et al., 1998; Tan, 2006). Given the size of contemporary data warehousing repositories, multiprocessor solutions are crucial for the massive computational demands for current and future OLAP system (Dehne et al., 2006). The assumption of most of the fast computation algorithms is that their algorithms can be applied into the parallel processing system (Dehne, 2006; Tan, 2006). As a result, it is sometimes necessary to use parallel processing for data mining because large amounts of data and massive search efforts are involved in data mining (Turban et al., 2005). Therefore, data partitioning and parallel processing are two complementary techniques to achieve the reduction of query processing cost in data warehousing design and development (Bellatreche, 2006).

FUTURE TRENDS

Currently, data warehousing is largely applied in customer relationship management (CRM). However, there are up to date no agreed upon standardized rules for how to design a data warehouse to support CRM and a taxonomy of CRM analyses needs to be developed to determine factors that affect design decisions for CRM data warehouse (Cunningham et al., 2006).

In data modeling area, to develop a more general solution for modeling data warehouse current ER model and dimensional model need to be extended to the next level to combine the simplicity of the dimensional model and the efficiency of the ER model with the support of object oriented concepts.

CONCLUSION

Several data warehousing development and design methodologies have been reviewed and discussed. Data warehouse data model differentiates itself from ER model with an orientation toward specific business purposes. It benefits an enterprise greater if the CIF and MD architectures are both considered in the design of a data warehouse. Some of the methodologies have been practiced in the real world and accepted by today's businesses. Yet new challenging methodologies, particularly in data modeling and models for physical data warehousing design, such as the metric-driven methodology, need to be further researched and developed.

KEY TERMS

Dimensions: They are the perspectives or entities with respect to which an organization wants to keep records (Han & Kamber, 2006, p. 110).

Dimensional Model: A model containing a central fact table and a set of surrounding dimension tables, each corresponding to one of the components or dimensions of the fact table.

Entity-Relationship Data Model: A model that represents database schema as a set of entities and the relationships among them.

Fact Table: The central table in a star schema, containing the names of the facts, or measures, as well as keys to each of the related dimension tables.

Metric-Drive Design: A data warehousing design approach which begins by defining key business processes that need to be measured and tracked over time. Then they are modeled in a dimensional model.

Parallel Processing: The allocation of the operating system's processing load across several processors (Singh, 1998, p. 209).

Star Schema: A modeling diagram which contains a large central table (fact table) and a set of smaller attendant tables (dimension tables) each represented by only one table with a set of attributes.