INTRODUCTION

Annotated data have recently become more important, and thus more abundant, in computational linguistics . They are used as training material for machine learning systems for a wide variety of applications from Parsing to Machine Translation (Quirk et al., 2005). Dependency representation is preferred for many languages because linguistic and semantic information is easier to retrieve from the more direct dependency representation. Dependencies are relations that are defined on words or smaller units where the sentences are divided into its elements called heads and their arguments, e.g. verbs and objects. Dependency parsing aims to predict these dependency relations between lexical units to retrieve information, mostly in the form of semantic interpretation or syntactic structure.

Parsing is usually considered as the first step of Natural Language Processing (NLP). To train statistical parsers, a sample of data annotated with necessary information is required. There are different views on how informative or functional representation of natural language sentences should be. There are different constraints on the design process such as: 1) how intuitive (natural) it is, 2) how easy to extract information from it is, and 3) how appropriately and unambiguously it represents the phenomena that occur in natural languages.

In this article, a review of statistical dependency parsing for different languages will be made and current challenges of designing dependency treebanks and dependency parsing will be discussed.

DEPENDENCY GRAMMAR

The concept of dependency grammar is usually attributed to Tesniere (1959) and Hays (1964). The dependency theory has since developed, especially with the works of Gross (1964), Gaiffman (1965), Robinson (1970), Mel'cuk (1988), Starosta (1988), Hudson (1984, 1990), Sgall et al. (1986), Barbero et al. (1998), Duchier (2001), Menzel and Schroder (1998), Kruijff (2001).

Dependencies are defined as links between lexical entities (words or morphemes) that connect heads and their dependants. Dependencies may have labels, such as subject, object, and determiner or they can be unla-belled. A dependency tree is often defined as a directed, acyclic graph of links that are defined between words in a sentence. Dependencies are usually represented as trees where the root of the tree is a distinct node. Sometimes dependency links cross. Dependency graphs of this type are non-projective. Projectivity means that in surface structure a head and its dependants can only be separated by other dependants of the same head (and dependants of these dependants). Non-projec-tive dependency trees cannot be translated to phrase structure trees unless treated specially. We can see in Table 1 that the notion of non-projectivity is very common across languages although distribution of it is usually rare in any given language. The fact that it is rare does not make it less important because it is this kind of phenomena that makes natural languages more interesting and that makes all the difference in the generative capacity of a grammar that is suggested to explain natural languages.

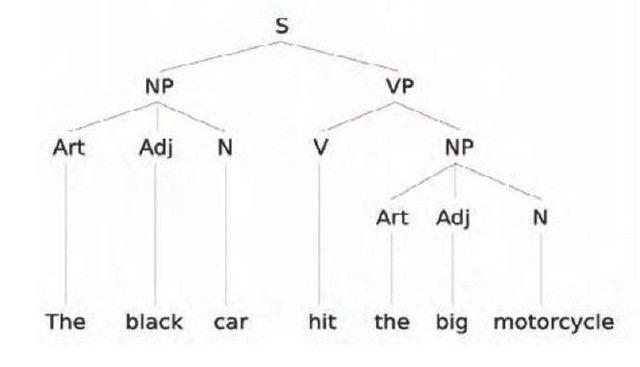

An example dependency tree is in Figure 1. The corresponding phrase structure tree is shown in Figure 2. The ROOT of this tree is "hit".

Given the basic concept of dependency, different theories of dependency grammar exist. Among many well known are: Functional Generative Description (Sgall et al., 1969, 1986), (Petkevic, 1987, 1995), Dependency Unification Grammar (DUG) Hellwig (1986, 2003), Meaning Text Theory (Gladkij and Mel'cuk, 1975), (Mel'cuk, 1988) and Lexicase (Starosta, 1988), Topological Dependency Grammar (Gerdes and Kah-ane, 2001). Kruijff (2001) also suggests a type of logic for dependency grammar, "Dependency Grammar Logic" which aims transparent semantic interpretation during parsing.

There are many open issues regarding the representation of dependency structure. Hays (1964) and Gaifman (1965) take dependency grammars as special cases of phrase structure grammars whereas Barbero et al. (1998), Menzel and Schroder (1998), Eisner (2000), Samuelsson (2000), Duchier (2001), Gerdes and Kahane (2001), Kruijff (2001) think they are completely different.

Figurel. Dependency Tree for the sentence "The red car hit the big motorcycle"

Figure 2. Phrase Structure Tree forthe sentence in Figure 1

Generative capacity of dependency grammars has long been discussed (Gross, 1964), (Hays, 1964), (Gaifman, 1965), (Robinson, 1970). Dependency grammars were proved to be context-free (Gaiffman, 1965). When natural languages were proved to be not context-free, but in a class called "Mildly Context-Sensitive" (Joshi, 1985) they were abandoned until 90s, when Vijayashanker and Weir (1994) showed that Head Grammars -an extension of CFGs- (Pollard, 1984) are mildly context-sensitive like Tree Adjoining Grammar (TAG), (Joshi et al., 1975) and Combinatory Categorial Grammar (CCG), (Steedman,2000). Recently, Kuhlmann and Mohl (2007) defined "regular dependency languages" and showed that applying different combinations of gap-degree and well-nestedness restrictions on non-projectivity in these languages gave a class of mildly context-sensitive grammars.

DEPENDENCY TREEBANKS

Why Dependency Trees?

Many new corpora have been designed and created in the past few years. Dependency representation is preferred when these corpora are designed. This can be argued by the following properties of dependency trees:

1. They are easier to annotate than some other representation types like phrase structure trees (PST). There are fewer tags and labels (only as many as words in a sentence) and no internal nodes to name the phrases as in PSTs.

2. Some information such as predicate-argument structure can be extracted trivially from them which is not the case for PSTs.

3. Another interesting result is that some dependency parsers run much faster than PST parsers. Computational complexity of a standard PST parser is O(n5) whereas a non-projective DT parser runs in O(n2).

Dependency Treebanks

Table 1 compares dependency corpora of 19 languages1. This information is gathered from CoNLL-X and CoNNL 2007 shared tasks on dependency parsing. The reader is referred to Buchholz and Marsi (2006) and Nivre et al. (2007) for more information on dependency treebanks included in the tasks. Although, the underlying theory is the same in all of these treebanks there are maj or differences in the outcome that originate from the questions like 1) how much information is needed to put in the dependency trees, 2) how strongly interlaced the different modules such as morphology syntax are in a language. For instance, Czech treebank (Bohmova et al., 2003) has 3 different levels of representation, namely, morphological, grammatical and tecto-grammatical layers. Morphology-syntax interface in Turkish makes word-based dependencies inappropriate (^akici, 2008). Therefore, dependencies are between morphological sub-groups called inflectional groups (IG) rather than words. These are two arguments among many on why it is very important to make a good feasibility study when designing a dependency treebank as different aspects of languages require different treatment.

DEPENDENCY PARSING

Statistical or data-driven parsing methods have gained more focus with the continuous introduction of new linguistic data. Parsing was more focused on training and parsing with phrase structure trees and specifically English language because the Penn Treebank (Marcus et al., 1993) was the only available source for a long time. With the introduction of treebanks of different languages it is now possible to explore the bounds of multilingual parsing.

Table 1. Treebank information; #T = number of tokens * 1000, #S = number of sentences * 1000, #T/#S = tokens per sentence, %NST = % of non-scoring tokens (only in CoNLL-X), %NPR = % of non-projective relations, %NPS = % of non-projective sentences, IR = has informative root labels

| Language | #T | #S | #T/#S | %NST | %NPR | %NPS | IR | |||||||

| Arabic | 54 | 112 | 1.5 | 2.9 | 37.2 | 38.3 | 8.8 | - | 0.4 | 0.4 | 11.2 | 10.1 | Y | - |

| Basque | - | 51 | - | 3.2 | - | 38.3 | - | - | - | 2.9 | - | 26.2 | - | - |

| Bulgarian | 190 |

| 12.8 | - | 14.8 | - | 14.4 | - | 0.4 | - | 5.4 | - | N | - |

| Catalan | - | 431 | - | 15 | - | 28.8 | - | - | - | 0.1 | - | 2.9 | - | - |

| Chinese | 337 | 337 | 57 | 57 | 5.9 | 5.9 | 0.8 | - | 0.0 | 0.0 | 0.0 | 0.0 | N | - |

| Czech |

| 432 | 72.7 | 25.4 | 17.2 | 17.0 | 14.9 | - | 1.9 | 1.9 | 23.2 | 23.2 | Y | - |

| Danish | 94 | - | 5.2 | - | 18.2 | - | 13.9 | - | 1.0 | - | 15.6 | - | N | - |

| Dutch | 195 | - | 13.3 | - | 14.6 | - | 11.3 | - | 5.4 | - | 36.4 | - | N | - |

| English | - | 447 | - | 18.6 | - | 24.0 | - | - | - | 0.3 | - | 6.7 | - | - |

| German | 700 | - | 39.2 | - | 17.8 | - | 11.5 | - | 2.3 | - | 27.8 | - | N | - |

| Greek | - | 65 | - | 2.7 | - | 24.2 | - | - | - | 1.1 | - | 20.3 | - | - |

| Hungarian | - | 132 | - | 6.0 | - | 21.8 | - | - | - | 2.9 | - | 26.4 | - | - |

| Italian | - | 71 | - | 3.1 | - | 22.9 | - | - | - | 0.5 | - | 7.4 | - | - |

| Japanese | 151 | - | 17 | - | 8.9 | - | 11.6 | - | 1.1 | - | 5.3 | - | N | - |

| Portuguese | 207 | - | 9.1 | - | 22.8 | - | 14.2 | - | 1.3 | - | 18.9 | - | Y | - |

| Slovene | 29 | - | 1.5 | - | 18.7 | - | 17.3 | - | 1.9 | - | 22.2 | - | Y | - |

| Spanish | 89 | - | 3.3 | - | 27 | - | 12.6 | - | 0.1 | - | 1.7 | - | N | - |

| Swedish | 91 | - | 11 | - | 17.3 | - | 11.0 | - | 1.0 | - | 9.8 | - | N | - |

| Turkish | 58 | 65 | 5 | 5.6 | 11.5 | 11.6 | 33.1 | - | 1.5 | 5.5 | 11.6 | 33.3 | N | - |

The early efforts of data-driven dependency parsing were focused on translating dependency structures to phrase structure trees for which the parsers already existed. But it was realised quickly that doing this was not as trivial as previously thought. It is much more trivial and more intuitive to represent some phenomena with dependency trees rather than phrase structure trees such as local and global scrambling, in other words free word-order. Thus the incompatible translations of dependency structures to phrase structure trees resulted in varying degrees of loss of information.

Collins et al. (1999) reports results on Czech. He translates the dependency trees to phrase structure trees in the flattest way possible and names the internal nodes after part of speech tag of the head word of that node. He uses Model 2 in Collins (1999) and then evaluates the attachment score on the dependencies extracted from the resulting phrase structure trees of his parser. However, crossing dependencies cannot be translated into phrase structure trees (^akici and Baldridge, 2006) unless surface order of the words is changed. But Collins et al. (1999) does not mention crossing dependencies, therefore, we do not know how he handled non-projectivity.

One of the earliest statistical systems that aims parsing dependency structures directly without an internal representation of translation is Eisner (1996). He proposes 3 different generative models. He evaluates them on the dependencies derived from the Wall Street Journal part of the Penn Treebank. Eisner reports 90 percent for probabilistic parsing of English samples from WSJ. He reports 93 percent attachment score when gold standard tags were used, which means 93 percent of all the dependencies are correct regardless of the percentage of the dependencies in each sentence. Eisner's parser is a projective parser thus it cannot inherently predict crossing dependencies.

Discriminative dependency parsers such as Kudo and Matsumoto (2000, 2002), and Yamada and Matsumoto (2003) were also developed. They use support vector machines to predict the next action of a deterministic parser. Nivre et al. (2004) does this by memory-based learning. They are all deterministic parsers.

McDonald et al. (2005b) tried something new and applied graph spanning algorithms to dependency parsing. They formalise dependency parsing as the problem of finding a maximum spanning tree in a directed graph. MIRA is used to determine the weights of dependency links as part of this computation. This algorithm has two major advantages: it runs in O(n2) time and it can handle non-projective dependencies directly. They show that this algorithm significantly improves performance on dependency parsing for Czech, especially on sentences which contain at least one crossed dependency. Variations of this parser has been used in CoNLL-X shared task and received the highest ranking among the participants averaged over the results of all of the 13 languages (Buchholz and Marsi, 2006). However, when no linguistic or global constraints are applied it may yield absurd dependency sequences such as assigning two subjects to a verb (Riedel et al., 2006). McDonald (2005a) uses MIRA learning algorithm with Eisner's parser and reports results for projective parsing.

FUTURE TRENDS

There is growing body of work on creating new tree-banks for different languages. Requirements for the design of these treebanks are at least as diverse as these natural languages themselves. For instance, some languages have a much more strong morphological component or freer word order than others. Understanding and modelling these in the form of annotated linguistic data will guide the understanding of natural language, and technological advancement will hopefully make it easier to understand the inner workings of the language faculty of humans. There are challenges both for dependency parsing and for the dependency theory. For instance, modelling long-distance dependencies and, multiple head dependencies are still awaiting attention and there is much to do on morphemic dependency approach where heads of phrases can be morphemes rather than words in a sentence for morphologically complex languages. Although these constitute a fraction of all the phenomena in natural languages, they are the "tricky" part of the NLP systems that will never be perfect as long as these natural phenomena are ignored.

CONCLUSION

This article has reviewed dependency grammar theory together with recent advances in statistical dependency parsing for different languages. Some current challenges in building dependency treebanks and dependency parsing have also been discussed. Dependency theory and practical applications of dependency representations have advantages and disadvantages. The fact that dependency parsing is easy to adapt to new languages, and is well-adapted to representing free word-order, makes it the preferred representation for many new linguistic corpora. Dependency parsing is also developing in the direction of multi-lingual parsing where a single system is required to be successful with different languages. This research may bring us closer to understanding the linguistic capacity of human brain, and thus to building better NLP systems.

KEY TERMS

Corpus (corpora plural): A collection of written or spoken material in machine-readable form.

Machine Translation (MT): The act of translating something by means of a machine, especially a computer.

Morpheme: The smallest unit of meaning. A word may consist of one morpheme (need), two morphemes (need/less, need/ing) or more (un/happi/ness).

Phrase Structure Tree: A structural representation of a sentence in the form of an inverted tree, with each node of the tree labelled according to the phrasal constituent it represents.

Rule-Based Parser: A parser that uses hand written (designed) rules as opposed to rules that are derived from the data.

Statistical Parser: A group of parsing methods within NLP. The methods have in common that they associate grammar rules with a probability.

Treebank: A text-corpus in which each sentence is annotated with syntactic structure. Syntactic structure is commonly represented as a tree structure. Treebanks can be used in corpus linguistics for studying syntactic phenomena or in computational linguistics for training or testing parsers.